Digital engineering is an integrated approach that uses authoritative sources of system data and models as a continuum across disciplines to support lifecycle activities from concept through disposal. In the simplest of terms, it is the act of creating, capturing, and integrating data using models and innovative technology in an orchestrated manner in order to unlock greater value and provide positive impacts on cost and schedule. This integrated approach means that the data from the digital models such as CAD models, electrical circuit models, system models, and software models as well as the domain discipline processes are orchestrated tightly. Hardware, systems, and software engineers are now working much more closely to design and develop systems.

Digital engineering is a movement taking shape in many industry segments, such as medical device development, automotive, defense, consumer electronics, and aerospace, to reduce the pains of developing new products. The U.S. Department of Defense (DoD) has a poor track record of fielding new systems on time and within budget. Acquisition teams are mired in antiquated processes and are reluctant to attempt improvements that would cause too much organizational friction.

The DoD has recognized this deficiency and has identified the following challenges with its current acquisition engineering process:

- It has a linear acquisition process that is not agile or resilient

- Stakeholders use stove-piped infrastructures, environments, and data sources to support various activities throughout the lifecycle

- Communication, collaboration, and decisions happen through static, disconnected documents that are subject to interpretation

- The existing practices are unable to deliver technology fast enough

The Drive for Evolution in Engineering Practices

Kristen Baldwin, Acting Deputy Assistant Secretary of Defense for Systems Engineering, wrote, “In this era of rapid growth in technology, information, and complexity, we need to evolve our engineering practices. This evolution incorporates advancements in our engineering methodologies (methods, processes, and tools) to enable data-driven decision-making throughout the acquisition lifecycle.”

In 2018, the U.S. Secretary of Defense encouraged everyone to adopt new practices in order to modernize delivered systems and prioritize speed of delivery. This encouragement was backed by a Digital Engineering Strategy, which aims to allow the DoD and its industry partners to work more collaboratively at the engineering level.

DoD’s Expected Benefits of Digital Engineering

Expected benefits of digital engineering include better-informed decision making, enhanced communication, increased understanding of and confidence in the system design, and a more efficient engineering process.

The Top Five Goals of DoD’s Digital Engineering Strategy

- Formalize the development, integration, and use of models to inform enterprise and program decision making

- Provide an enduring, authoritative source of truth

- Incorporate technological innovation to improve the engineering practice

- Establish a supporting infrastructure and environments to perform activities, collaborate, and communicate across stakeholders

- Transform the culture and workforce to adopt and support digital engineering across the lifecycle

There is a dire need to reduce the red tape without compromising the federal laws of oversight.

Smart Systems Engineers

A systems engineer’s primary job is to work with the end users who are paying for the product. These users have “operational requirements” that must be satisfied so that they can meet their mission needs.

For the past decade, the vision has been to leverage Model-Based Systems Engineering (MBSE), using models to analyze and manage those requirements and to ensure they are met by the end of product development.

The systems engineer becomes the translator from the electrical engineers to the mechanical engineers to the computer scientists to the operator of the system to the maintainer of the system to the buyer of the system. Each of these teams speaks a different language.

The idea of using models was to provide communication in a simple, graphical form, yet only a tiny percentage of programs have demonstrated MBSE’s utility in achieving this vision. Smart systems engineers today recognize that models need to be expressed in formats that are more consumable by the broader stakeholder community so that communication and collaboration can take place.

Jama Software’s Digital Engineering Bridge

Jama Connect helps satisfy the DoD Digital Engineering Strategy’s first goal (formalize the development, integration, and use of models to inform enterprise and program decision making) by acting as a digital engineering bridge that connects the model world with the document world.

Time-consuming and error-prone methods of consuming data via documents, spreadsheets, and legacy requirements tools introduce program risk and lengthen the program timeline.

Jama Connect’s unique user interface (UI) allows non-technical stakeholders to visualize a model of the system of interest and interact with the requirements in familiar views like documents and spreadsheets.

The Benefits

Whether Jama Connect is used in conjunction with MBSE SysML models or used as a standalone digital engineering platform, the system provides:

- Visualization of the shared system model and the status of its artifacts in the development lifecycle

- System and behavioral diagrams

- Faster requirements development

- Verification and validation of requirements developed in the model

- Mechanisms for broader audience communication and participation – tool specialists are not needed

- Baselining of requirements

- Requirements attribute management and workflow

- Requirements decomposition and tracing

- Specialized document generation and data reporting

Applying MBSE to create models in Jama Connect is supported by a series of pre-defined views and by its underlying relationship ontology, which enforces the rigor and consistency demanded by the framework.

Jama Connect can be used in conjunction with dedicated SysML tools or as a standalone system. In fact, the data in Jama Connect is organized much in the same fashion as that in a modeling tool and performs many of the same functions. This is what makes it very attractive to organizations that do not have enough staff trained to use dedicated SysML graphical modeling tools.

What Are Requirements in Jama Connect?

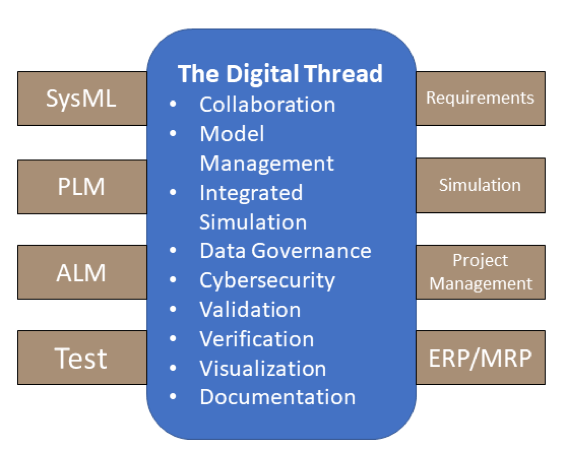

From a big-picture perspective, requirements themselves are the digital thread that connects the stakeholders’ stated needs and constraints to the system, hardware, and software designs that articulate to developers what must be built. The notion that a “document” needs to exist for requirements to be captured and managed is a paradigm that the Digital Engineering Strategy is meant to eliminate. Jama Connect and modeling tools maintain requirements as atomic, individual objects that can then be consumed by all engineering disciplines in their own fashion. The overhead of maintaining documents just so they can be communicated to non-technical stakeholders is eliminated.
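To make the idea of requirements as atomic, traceable objects more concrete, here is a minimal sketch in Python. It is not Jama Connect’s data model or API. The `Requirement` class, its field names, and the `untraced` and `downstream` helpers are all hypothetical, chosen only to show how individual requirement objects with trace links can answer questions that a static document cannot.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One atomic requirement; the field names are illustrative assumptions."""
    req_id: str
    text: str
    level: str  # e.g. "stakeholder", "system", "software"
    traces_to: list[str] = field(default_factory=list)  # ids of upstream requirements


def untraced(requirements: list[Requirement], top_level: str = "stakeholder") -> list[str]:
    """Return ids of lower-level requirements with no link to an existing upstream item."""
    known = {r.req_id for r in requirements}
    return [
        r.req_id
        for r in requirements
        if r.level != top_level and not any(t in known for t in r.traces_to)
    ]


def downstream(requirements: list[Requirement], req_id: str) -> list[str]:
    """Return ids of every requirement that traces, directly or indirectly, to req_id."""
    found: list[str] = []
    frontier = [req_id]
    while frontier:
        current = frontier.pop()
        for r in requirements:
            if current in r.traces_to and r.req_id not in found:
                found.append(r.req_id)
                frontier.append(r.req_id)
    return sorted(found)


reqs = [
    Requirement("STK-1", "Operators must detect obstacles at night.", "stakeholder"),
    Requirement("SYS-1", "The system shall detect obstacles in low light.", "system", ["STK-1"]),
    Requirement("SW-1", "The software shall process infrared frames.", "software", ["SYS-1"]),
    Requirement("SW-2", "The software shall log diagnostics.", "software"),
]

print(untraced(reqs))             # requirements with no upstream justification
print(downstream(reqs, "STK-1"))  # everything affected if STK-1 changes
```

Because each requirement is its own object, a gap in traceability (here, `SW-2`) or the impact of changing a stakeholder need can be computed directly rather than found by rereading documents.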

There is also a misconception that requirements engineering is performed only by systems engineers. A more agile approach to requirements engineering is to have all stakeholders validate requirements as they are being written (or as close to real time as possible). This is difficult, however, because reading and understanding a SysML model requires a specialist with many months of technical training. By using Jama Connect instead of documents or legacy requirements tools, the primary means of communication moves away from static and disconnected documents, and the paradigm shifts to models and data serving as the basis for connecting traditionally siloed elements, providing an integrated information exchange throughout the lifecycle.

Authoritative Source of Truth?

The DoD has used the phrase ‘single source of truth’ for decades, so much so that it has become the butt of ridicule. Misaligned tools and processes push data into documents, since that is the only medium that can be shared across a wide variety of stakeholders. This practice has led to outright errors in data, since it is difficult to keep track of document versions; longer development timelines, just to produce “documents” polished in formatting more suitable for print; and higher overall cost, since contractors have to charge for the additional document production time and publishing expertise. It is common for engineers to complain that more of their time is spent producing pretty documents than doing engineering work.

The Digital Engineering Strategy attempts to combat the “where’s the latest document” question by describing, in its second goal, an authoritative source of truth: “The authoritative source of truth facilitates a sharing process across the boundaries of engineering disciplines, distributed teams, and other functional areas. It will provide the structure for organizing and integrating disparate models and data across the lifecycle. In addition, the authoritative source of truth will provide the technical elements for creating, updating, retrieving, and integrating models and data.”

The Jama Connect platform is purpose-built to facilitate data sharing across stakeholder disciplines and location boundaries. An easy-to-use web UI captures both the data and the real-time decisions and feedback taking place around that data. This keeps all stakeholders informed when change occurs and makes sure everyone gets the content they need, right when they need it. The result is that stakeholders can access the most recent versions of the data engineers are working on without relying on document exports, the root of all waste.

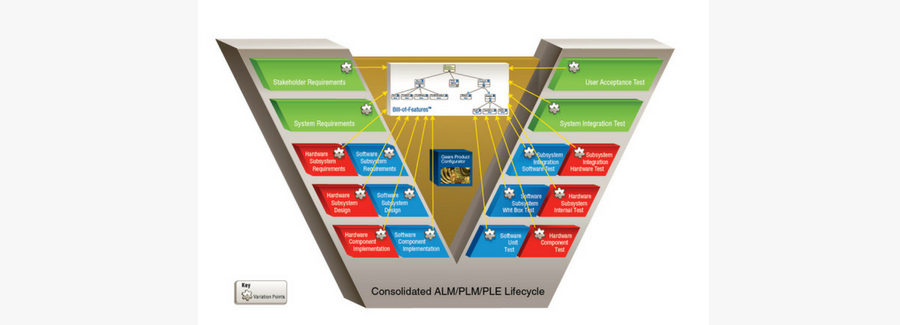

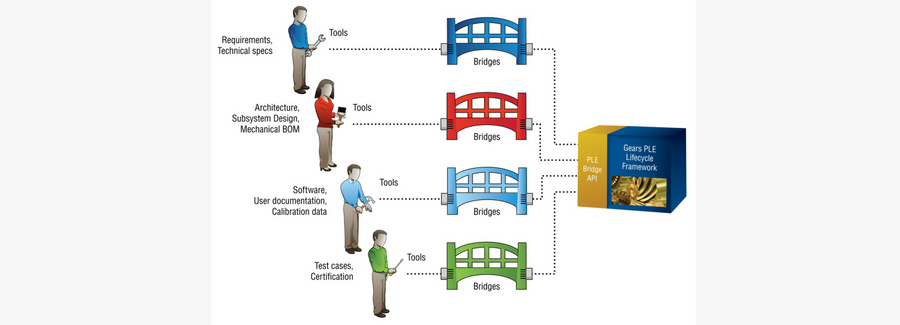

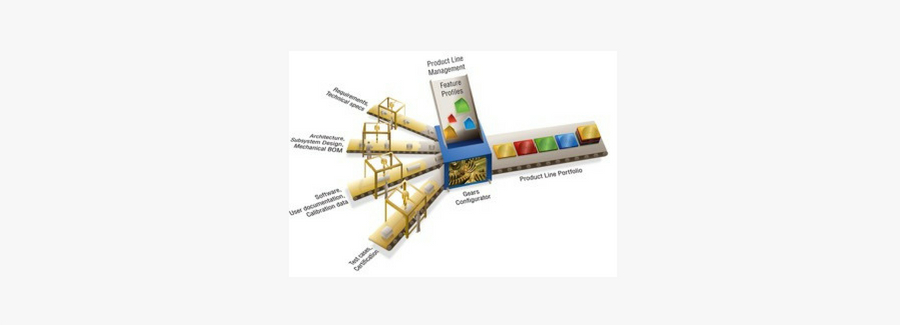

Fitting Within the Infrastructure

No single tool will fulfill the new Digital Engineering Strategy, so to avoid the same stovepipe problem that documents bring, the infrastructure and the tools used must become more consolidated and collaborative. Every tool, whether it is an MBSE tool, software engineering tool, testing tool, or CAD tool, needs to have its data easily integrated with the rest of the tools within the infrastructure. As the strategy states, “DoD’s strategy is to focus on standards, data, formats, and interfaces between tools rather than being constrained to particular tools.” This is probably the most difficult of all the goals to achieve, not because of a lack of data exchange standards or vendor capabilities, but simply because of the distributed nature of the development teams themselves.

In a contractor supply chain model, customers and their contractors perform their work on their own networks, which are disconnected from each other. Even when all work takes place at a government site, classification levels force segregation of data onto different networks. Strategies for integrating data in this complex environment must be planned in advance.

Jama Connect’s foundational architecture eases integration within the tool ecosystem. It has a built-in RESTful API and supports the OSLC and ReqIF industry standards for exchanging data. These capabilities mean that when organizations integrate their digital engineering tools, numerous third-party integration platforms are readily available, and in many cases they already have templates in place to connect the endpoints.
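As a rough illustration of what exchanging requirement data between tools involves, the sketch below exports requirement records to a tool-neutral JSON document and merges them into a second tool’s store. It is an assumption-laden example, not Jama Connect’s REST API and not the ReqIF or OSLC schema. The `export_requirements` and `import_requirements` functions, the `"example-exchange"` format label, and the field names are all hypothetical.

```python
import json


def export_requirements(requirements):
    """Serialize requirement records to a tool-neutral JSON string (hypothetical format)."""
    payload = {"format": "example-exchange", "version": 1, "items": requirements}
    return json.dumps(payload, sort_keys=True)


def import_requirements(document, existing):
    """Merge an exported document into another tool's store, keyed by requirement id.

    Returns (created_ids, updated_ids) so a reviewer can see what changed.
    """
    created, updated = [], []
    for item in json.loads(document)["items"]:
        key = item["id"]
        if key not in existing:
            created.append(key)
        elif existing[key] != item:
            updated.append(key)
        existing[key] = item
    return created, updated


# Records as they might exist in the source tool.
source = [
    {"id": "SYS-1", "text": "The system shall detect obstacles in low light.", "status": "approved"},
    {"id": "SYS-2", "text": "The system shall report faults to the operator.", "status": "draft"},
]

# The receiving tool already holds an older copy of SYS-1.
target = {
    "SYS-1": {"id": "SYS-1", "text": "The system shall detect obstacles.", "status": "draft"},
}

created, updated = import_requirements(export_requirements(source), target)
print("created:", created)
print("updated:", updated)
```

Keying the merge on a stable identifier is what lets the receiving tool distinguish a new requirement from a changed one, which is the kind of concern a real exchange standard or integration platform has to handle.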

Parting Words

There isn’t a single silver bullet to digital engineering, but I hope you leave this post with some ideas, some encouragement, and maybe some new determination to start your digital engineering journey.

To learn more on the topic of requirements management, we’ve curated some of our best resources for you here.

This article is Part 2 of a two-part series by our friends at
