![[Webinar Recap] Navigating AI Safety with ISO 8800: Requirements Management Best Practices](https://www.jamasoftware.com/media/2025/04/Navigating-AI-Safety-with-ISO-8800.png)

In this blog, we recap our recent webinar, “Navigating AI Safety with ISO 8800: Requirements Management Best Practices.”

[Webinar Recap] Navigating AI Safety with ISO 8800: Requirements Management Best Practices

As artificial intelligence (AI) becomes increasingly embedded in automotive and semiconductor applications, ensuring its safety is critical.

In this webinar recap, Matt Mickle from Jama Software and Jody Nelson from SecuRESafe (SRES) dive into the newly introduced ISO/PAS 8800, which provides a framework for managing AI-related safety requirements in road vehicles and addresses the challenges of functional safety, system reliability, and risk mitigation.

What You’ll Learn:

- The importance and framework of ISO/PAS 8800 for AI safety in road vehicles

- How to derive and manage AI safety requirements effectively

- Addressing insufficiencies in AI systems and ensuring traceability to related standards

- Practical strategies for integrating ISO 8800 into a structured requirements and systems engineering workflow

Below is an abbreviated transcript of our webinar.

Jody Nelson: Appreciate the invitation from Jama Software for this discussion. I think it’s a very important topic, as we’re going to be talking about a newly released standard, ISO/PAS 8800. For our agenda today, we’re going to start out by talking about the framework and importance of 8800. In order to do this, we have to pull in other standards. As we’ll see in this discussion, 8800 is not a standalone standard. It has dependencies on ISO 26262 and ISO 21448. So we’ll start out with some framework for ADAS and automated driving systems, and then we’ll go into deriving and managing AI safety requirements. This is a very difficult topic to go through, so this is where the partnership with Jama Software is really valuable: walking through it with a requirements management tool makes it much easier to see once we’re in a tool environment.

And we’ll talk about addressing insufficiencies. This is something that we talk about a lot in Safety of the Intended Functionality (SOTIF). Now, we’re going to drive that down to lower levels, into the AI system, including down to the machine learning model level. And with all of these safety standards that we talk about, and with all these aspects of safety, we need traceability. So, as in 26262, we’ll talk about traceability from requirements to verification testing to your safety analysis. These are the aspects that we want to show in today’s webinar. And then we’ll actually jump into the tool itself and show you a practical example of how to use 8800 and just show that flow.
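As a rough illustration of the traceability idea described above (this is not from the webinar and is not Jama Connect’s API), the following minimal Python sketch models requirements that link to verification tests and safety analysis items, then reports which requirements are missing a link. The class name `Requirement`, the function `find_trace_gaps`, and all IDs and requirement texts are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Requirement:
    """A safety requirement with trace links (hypothetical structure)."""
    req_id: str
    text: str
    # IDs of verification tests that cover this requirement
    verified_by: List[str] = field(default_factory=list)
    # IDs of safety analysis items that reference this requirement
    analyzed_in: List[str] = field(default_factory=list)


def find_trace_gaps(requirements: List[Requirement]) -> Dict[str, List[str]]:
    """Return, per requirement ID, which kinds of trace links are missing."""
    gaps: Dict[str, List[str]] = {}
    for req in requirements:
        missing = []
        if not req.verified_by:
            missing.append("verification")
        if not req.analyzed_in:
            missing.append("safety_analysis")
        if missing:
            gaps[req.req_id] = missing
    return gaps


# Illustrative data only; IDs and texts are made up for this sketch.
requirements = [
    Requirement("AI-SR-001", "Example AI safety requirement A",
                verified_by=["VT-010"], analyzed_in=["SA-003"]),
    Requirement("AI-SR-002", "Example AI safety requirement B",
                verified_by=["VT-011"]),
    Requirement("AI-SR-003", "Example AI safety requirement C"),
]

gaps = find_trace_gaps(requirements)
for req_id, missing in gaps.items():
    print(f"{req_id}: missing {', '.join(missing)}")
```

A requirements management tool maintains these links and gap reports for you; the sketch only shows the kind of check that traceability makes possible.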

So before we get into that, I do want to lay out a little bit of an AI requirements landscape. And before we jump into the AI safety landscape, let’s take a step back because it’s very important that we harmonize and ground ourselves with where we’re at now prior to these AI safety standards.

Well, the automotive functional safety development standard, as most of you know, ISO 26262, was released in November of 2011. We have this pyramid of development. And one of the biggest advantages of 26262 is that almost everything is built into the standard. So we don’t look outside the standard much when we’re in the traditional functional safety world. It’s all built into the standard.

Well, we start out with this quality management system (QMS) layer, and that’s the one exception to that last statement. This is where we point out to outside standards such as ISO 9001 and IATF 16949. These are the most commonly used in automotive, and that sets up the basis for our quality management layer. So that’s setting the initial processes.

Nelson: But that’s not sufficient for safety. So we build on top of that functional safety processes and functional safety policies, which we call our functional safety management. And the majority of that is captured in Part 2 of ISO 26262. So that’s the layer that we build on top of the QMS.

And then of course, we need a path forward. We need an understanding of the steps that we need to follow, and this is within our functional safety lifecycle. Again, this is built into the standard. We can jump into Part 2 of ISO 26262. It provides us an overall lifecycle from concept phase all the way to decommissioning. So we’re talking about a 15- to 20-year lifetime.

And then on top of that is where we do the actual development, and that’s where the standard’s Parts 3 through 7 go into the concept phase, deriving functional safety requirements and technical safety requirements, driving down into your hardware and software, and then coming back up this V cycle where we do verification and eventually validation.

Now, this framework is well established. As I mentioned, since 2011, we’ve been following ISO 26262, and nearly the entire framework is built in. As we transition into autonomous driving and AI safety, it gets a little bit less clear and less straightforward than this. So I readapted that first pyramid to look at the AI safety aspects of our development in automotive.

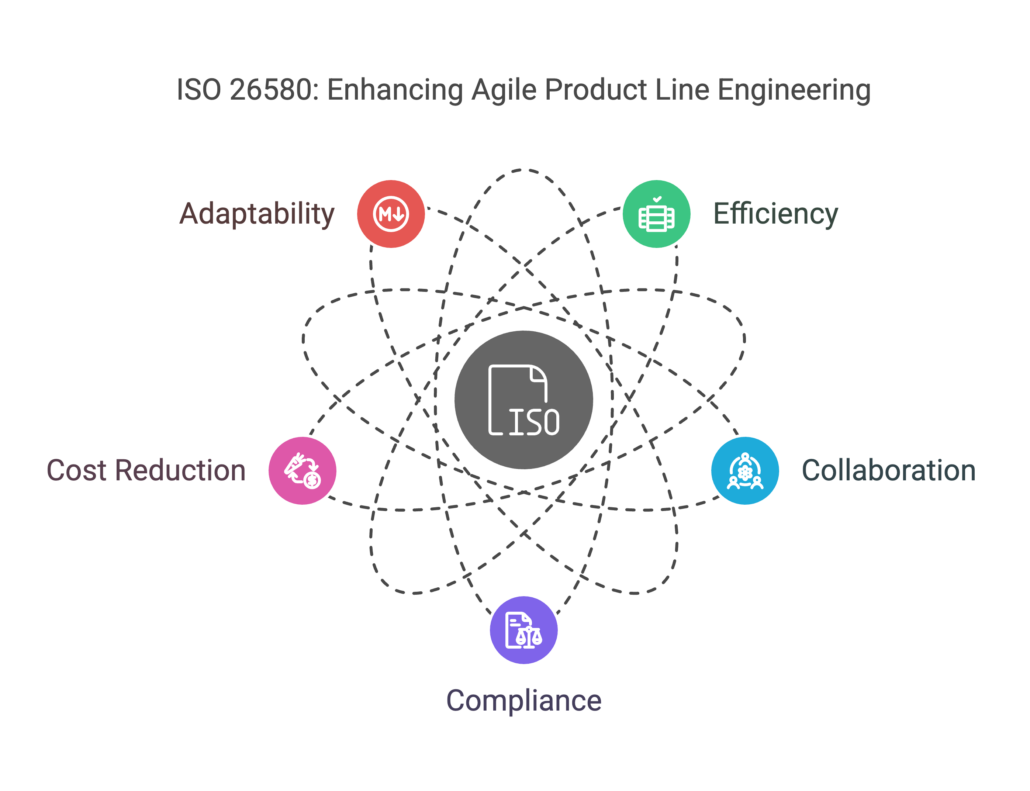

So in the bottom layer, we’re going to have to have an AI management system. We’re still going to use our 16949 or 9001 QMS, but we need to extend beyond that. And what was released late in 2023 is a standard called ISO/IEC 42001, which I’ll discuss briefly today. This sets up the nuances that we need to consider when we’re talking about AI: data governance, ethical concerns. All these kinds of responsible AI aspects are included in the framework of 42001. 42001 is not meant to replace 16949. It’s meant to work together with your QMS. So it’s not about getting rid of your QMS processes. It’s about adding in the concerns that are relevant to AI.

Well, just as we had in functional safety, we have to build in an AI safety management on top of that. Now, we’re going to start pulling in, for example, ISO/PAS 8800 that we’ll be talking about today, but in conjunction with the 26262 because 26262 still helps us establish the safety management. 8800 gives us the specific aspects of AI to that.

Nelson: And then our lifecycle, we will be following aspects of 26262 lifecycle, but also SOTIF. So the ISO 21448 will be a critical aspect as well because we’re going to be combining both of these ideas into what will lead into the aspects that we need for the ISO 8800. So all three of those will be incorporated to build in this AI safety lifecycle.

Then of course, for the AI safety development, we’re going to have aspects of 26262. We’re going to have aspects of SOTIF and 8800, as we discuss today. And then we have some complementary standards that will help us round this out. The ISO/IEC 5469 will be replaced by an actual technical standard in the future, but as of now, it is a technical report. It is informative, so it provides us only guidance; there are no “shalls” or requirements in it, but it’s going to help us. And as you go through 8800, you’ll see that it points out to 5469 in some cases. And then, soon to be released or currently released, there is ISO 5083, which will be a replacement for ISO 4804. This will again help align to ISO 26262, to that V cycle, that V-Model that we commonly use in the 26262 world, but it helps us more with the verification and validation activities in autonomous driving.

So I called this the new automotive model. As I mentioned before, we do have to point out to a few other standards. I do understand there is in some cases standards fatigue. We’re trying to boil this down into the most condensed version that we can present here.

So just briefly, I’ll look into a couple of these standards. As I mentioned ISO/IEC 42001, if you’re not familiar with this, it was released late in December of 2023. It is agnostic to industry, it’s agnostic to size of company, and it’s for both organizations that use AI or that develop AI. So it’s a very broad standard. Again, it is our QMS layer, but with the specific aspects of AI that we need to talk about. So it helps us ensure this responsible development of using AI systems. It does address ethical considerations, transparency, safety, and security, and it does provide a risk-based approach. Most of our functional safety standards and safety standards that we talk about in automotive are a risk-based approach. So within 42001, we talk about risk analysis, risk assessments, risk treatment, how we’re going to control these risks, and then an impact assessment of the overall risks that remain. So that’s our bottom layer.

And then I just wanted to point out the ISO/IEC 5469. Again, this is informative, meaning there are no “shalls” in the technical report. It just provides us guidance and draws the connection between functional safety and using AI systems, whether the AI system serves as a safety mechanism or can otherwise impact safety.